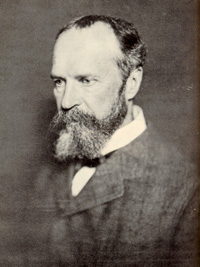

William James entered the field of psychology not with a bang or an explosion but like the morning dew distilling upon fields of clover. He was a reluctant psychologist who did not even want to be called a psychologist, yet he forever changed the course of modern psychology. William James did not merely change the course of American psychology; in some ways he was its course. He did not produce original research or perform experiments, yet he became the driving force behind the field. How did he accomplish this feat?

James was born to wealthy parents. His grandfather was one of the richest men in America, and William’s father inherited a considerable sum of money. Yet William’s father did not fall into the frivolous habits that often accompany inherited wealth. He was deeply involved in his children’s lives, educating them himself in matters both temporal and spiritual. He was not an indulgent father, but he allowed the children to make their own decisions about life and to form their own ideas. He also gave them opportunities to experience the world’s cultures and diversity. William and his family took several trips to Europe so that the children could be exposed to its languages, cultures, arts, and philosophies.

William was initially drawn to art. He wanted to become an artist, but after studying for a while in Paris, he decided that, while he was good, he was not good enough. So he turned to science. He enrolled at Harvard, where he worked with several scientists, and discovered that he abhorred scientific experimentation, finding it tedious. He appreciated the work of other scientists but did not want to do any experimentation himself. After graduating, he entered medical school at Harvard. Although he enjoyed the subjects he studied, William did not want to be a practicing physician; he decided he liked philosophy best. By then James had also been exposed to the work of great German psychologists such as Wilhelm Wundt and was impressed by their research.

The man had committed a crime and was sentenced to pay for it with his life. A crowd gathered to watch his punishment. Standing before him was the fateful Madame, the progeny of a French engineer. This woman with the acerbic jaw was to seal the criminal’s fate. He faced the crowd wide-eyed and fearful, pleading for his life, but his pleas fell on deaf ears as the frenzied crowd prepared for the spectacle. A German man stood waiting to play his part. Theodor Bischoff was not there to enjoy the public execution; he was there in the name of science. As the executioner led the criminal to the apparatus named after Joseph Guillotin (who, incidentally, did not invent the guillotine), Bischoff approached. The blade fell, and the criminal’s head dropped to the ground. Bischoff rushed over to the head to perform his experiment.

The man had committed a crime and was sentenced to pay. A crowd gathered to watch his punishment. There standing before him was the fateful Madame, the progeny of a French engineer. This Woman with the acerbic jaw was to seal the criminal’s fate. He faced the crowd wide-eyed and fearful, pleading for his life. His pleas seemed to fall on deaf ears as the frenzied crowd prepared for the spectacle. A German man stood waiting to play his part. Theodor Bischoff was not there to enjoy the public execution, he was there in the name of science. As the executioner led the criminal to the apparatus named after Joseph Guillotin (who by the way did not invent the guillotine), Bischoff approached. The blade fell and the criminal’s head dropped to the ground. Bischoff quickly rushed over to the head to perform his experiment.